Reimagining Memories

In the age of generative AI, our memories are no longer confined to fading photographs or fragmented recollections. Generative AI models have made significant strides in transforming text prompts into vivid images and videos. Google’s Veo 3, for instance, allows users to create high-quality videos complete with sound effects and ambient noise, offering a new dimension to storytelling.

Similarly, OpenAI’s Sora can generate videos up to a minute long, maintaining visual quality and adherence to user prompts.

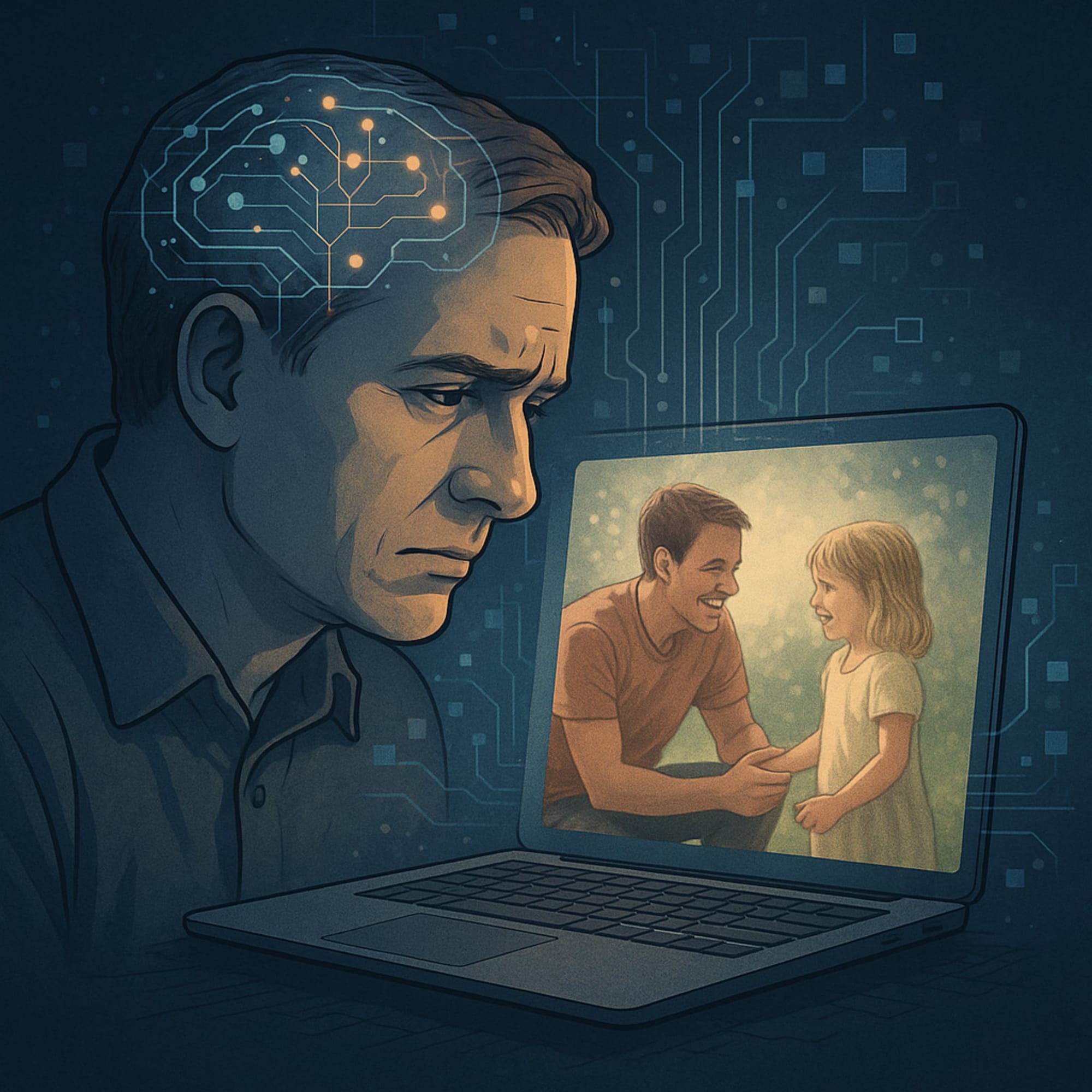

These tools cater not only to filmmakers and content creators but also to individuals who want to recreate personal memories. Imagine animating a cherished childhood photo or visualizing a family gathering that was never captured on camera. Projects like Synthetic Memories aim to recover lost or undocumented visual memories using AI, bridging the gap between imagination and reality.

The Psychological Costs of AI-Fabricated Memories

While the technology is promising, it’s essential to consider the psychological ramifications of using AI to fabricate or extend personal memories through images and videos.

Memory Distortion and the Misinformation Effect

Memory is already prone to distortion through gist-based and associative memory errors, and AI-generated content can further blur the line between actual memories and fabricated ones. This is an instance of the misinformation effect, which occurs when individuals incorporate misleading information into their memory of an event. For instance, viewing an AI-generated video of a childhood event might lead someone to believe it genuinely occurred, even if it didn’t. Such distortions could have profound implications, especially in contexts like eyewitness testimony or any situation in which the facts must be recalled accurately.

Erosion of Trust in Visual Media

The proliferation of deepfakes and AI-generated videos challenges our ability to trust visual content. As these technologies become more sophisticated, distinguishing between genuine footage and fabricated content becomes increasingly difficult. This erosion of trust can lead to skepticism towards all visual media, undermining the credibility of legitimate sources and potentially fueling misinformation.

Emotional and Psychological Impact

Engaging with AI-fabricated memories can have emotional consequences. Recreating moments with lost loved ones or revisiting traumatic events through AI can evoke intense emotions, potentially leading to distress or exacerbating grief. Moreover, the knowledge that a cherished memory has been artificially constructed might diminish its perceived authenticity, leading to feelings of emptiness or disillusionment.

Identity and Self-Perception Issues

AI-generated content can also impact our sense of self. For example, seeing a deepfake of oneself engaging in actions never performed can lead to identity confusion or distress. At worst, the manipulation of one’s image without consent raises ethical concerns and can have lasting psychological effects, including anxiety and diminished self-esteem.

Societal Implications and the “Truth Crisis”

On a broader scale, the widespread use of AI to fabricate memories can contribute to a societal “truth crisis.” As fabricated content becomes indistinguishable from reality, collective agreement on facts and historical events may erode. This fragmentation can hinder social cohesion, fuel polarization, and challenge the foundations of informed decision-making in democratic societies.

Navigating the Future

While AI offers remarkable tools for preserving and revisiting memories, it’s crucial to approach these technologies with caution. Establishing ethical guidelines, promoting media literacy, and fostering open dialogues about the implications of AI-generated content are essential steps in mitigating potential psychological harms.

As we navigate this new frontier, it’s imperative to balance the benefits of AI-enhanced memories with the potential costs to our psychological well-being and societal trust.