Vibe Coding with GPT-5

I used GPT-5 and Cursor to do some vibe coding and build an iOS app

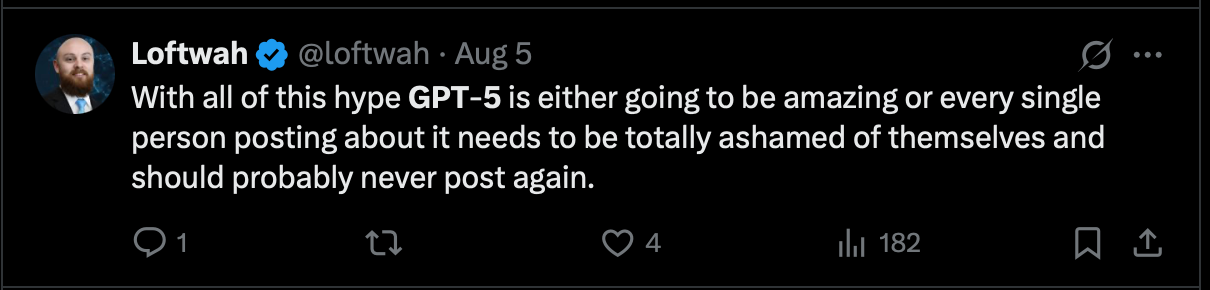

Didn’t you have high expectations for the GPT-5 model? I think a lot of people did, including ME!

Lucky for us, Cursor enabled free access to it for a week, so I decided to put it through its paces and add some features to an iPhone app I was vibe coding.

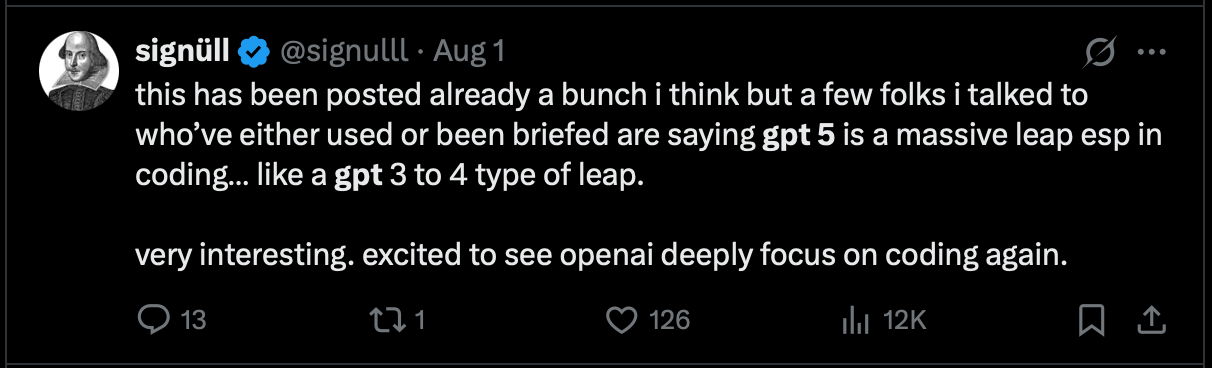

In case you missed it, OpenAI recently released its next generation of general-purpose models, the hotly anticipated GPT-5. You can’t blame me for having high expectations, especially given this blog post from Sam Altman earlier in the year and then this X post the day before.

So when it came out, I eagerly watched the keynote (start to finish), was amazed by the demos, was bemused by the charts, and came away thinking: it’s not AGI, but it did seem like progress.

In particular, the demos of using it to write code looked pretty cool. You could see it was vying to become the model of choice for my vibe coding sessions. What really piqued my interest was OpenAI bringing the CEO of what is arguably one of the hottest tools for programmers right now on stage to talk about how great it was.

After the keynote, when I found out that Cursor was giving free access to GPT-5 for a week, I had to get stuck in and try to make something with it.

On a separate note, I had vibe coded an iPhone app for the commuter train I take. If you live in New Jersey and work in New York, you probably know how stressful a journey (excuse the pun) it can be. Especially this summer: there was even a fire!!

So I wondered whether GPT-5 could help me with relaxing during my commute.

What if I asked it to add some features to that app? Think mini games, breathing exercises, and some meditative sounds, all while showing me the train status.

After a few days of vibe coding, I present to you the updated Path Train Arrivals App, complete with a breathing exercise, a relaxing garden mini game, and some ambient sounds!

Thanks to all the folks who make music and upload it to Pixabay. Here’s a link to all the sounds I used in the app.

So here’s my experience of vibe coding with GPT-5 in Cursor.

High-Level Stats

- Days of vibe coding: 3 (evenings)

- Time taken: around 11 hours

- Total tokens: around 19M

- Est. OpenAI API cost: $12.50*

*I was curious how much my vibe coding would have cost if I had been paying for GPT-5, so I calculated it using OpenAI list prices. Check out the Google Sheet I made with an export from Cursor if you want to see the details.

If you have read this far, you probably want to know what my first prompt to GPT-5 was.

“You should read the whole project to understand it”

89 seconds later, it responded with:

I completed a full read-through of the project and traced app flow, networking, models, and UI composition. Below is a concise overview and a few high-impact issues I noticed.

It made no code changes, only some analysis. Bear in mind the app was building perfectly fine, had no errors, and had even passed Apple’s public beta review process, so of course I was curious to know what it found.

So my next prompt to it was:

“I want you to explain each issue you found in more detail to me”

35 seconds later, I got back a summarized list with ‘What’, ‘Why it matters’, ‘Impact’ and ‘Fix’ for each issue. Interestingly, it gave me alternative fixes framed as “if” statements that depended on its assumptions.

So, time to make a new Git branch.

Tip: If you are just getting started with vibe coding, Git is your friend! Making regular commits and asking for changes step by step, checking that the app still builds after each change, will save you a lot of time and frustration!

So anyway, we went step by step through the issues GPT-5 found that I agreed with, and several hours later I think we were ready for the real reason I wanted to vibe code with it.

So here was my prompt:

“Ok now i want to do something drastically different. The path train is often late or delayed. I want to make the stations view more colorful and fun and it shouldn't just show the train details but be inviting like a botanical garden in colors and style and have mini games that users can play while they wait for their train. Maybe some soothing meditative sounds that they can play and pause and also some kind of mini games they can play while they wait. so people are relaxed and chill while they wait for the train”

20 seconds later, I had a new folder with 3 new views and some pretty clean code, although there were issues with layouts, constraints, and clipping when I built the app. It did build, though. One thing GPT-5 did that Claude hadn’t: SwiftUI lets you write previews so you don’t have to build the app each time to check layouts and visual elements, and GPT-5 made previews for 2 of the 3 new views, which loaded correctly. That sped up my feedback cycle back to the model for more changes.
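For readers new to SwiftUI, here is a minimal sketch of what a preview looks like, assuming Xcode 15 or later for the `#Preview` macro. `BreathingCirclesView` and its sizes, colors, and timings are hypothetical, not the code GPT-5 wrote for this app. It draws concentric circles inside a fixed, clipped frame, which is one way to keep rings from escaping their container. The `#Preview` block at the bottom renders in Xcode's canvas, so layout tweaks show up without building and running the app.

```swift
import SwiftUI

// Hypothetical breathing view: a sketch, not the app's real code.
struct BreathingCirclesView: View {
    static let ringCount = 3

    // Scale of ring `index` at full expansion: the outer ring is 1.0,
    // and each inner ring is 0.2 smaller than the one outside it.
    static func expandedScale(for index: Int) -> CGFloat {
        1.0 - CGFloat(index) * 0.2
    }

    @State private var isExpanded = false

    var body: some View {
        ZStack {
            ForEach(0..<Self.ringCount, id: \.self) { index in
                Circle()
                    // strokeBorder draws the line inside the circle's bounds.
                    .strokeBorder(Color.green.opacity(0.5), lineWidth: 2)
                    .scaleEffect(isExpanded ? Self.expandedScale(for: index) : 0.3)
            }
        }
        // A fixed square frame that the parent centers; clipping guarantees
        // nothing draws outside it even if a scale is raised above 1.0.
        .frame(width: 220, height: 220)
        .clipped()
        .onAppear {
            // Slow inhale/exhale cycle that reverses and repeats forever.
            withAnimation(.easeInOut(duration: 4).repeatForever(autoreverses: true)) {
                isExpanded = true
            }
        }
    }
}

// Rendered in Xcode's canvas without building and running the app.
#Preview {
    BreathingCirclesView()
}
```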

This then continued for a number of hours over another evening to ‘polish’ what it had built. I was determined to go back to the model for changes, even though I could just as easily have made some of the code changes myself for trivial things like constraints in a VStack, because I wanted to see if it would realize its mistake. It didn’t, and it took a bit of back and forth (less than with Claude in Cursor) and feedback to the model to get something that looked ‘good enough’.

Here's an example of another prompt I gave the model:

“this is a great start but its on the list view of stations and not in the view with the details of the stations can you move it, also you need to adjust the alignments of the views especially the breathe animation as it's off center and the concentric circles escape outside of the view”

I found myself spending more time asking for bug fixes like this than I would have liked. It showed me that this model wasn’t the revolution it looked like at launch, but rather an evolution. What was interesting was the amount of time it spent thinking and reasoning. Here is an excerpt from one of the reasoning steps it took:

Updating game layout. I need to update the GardenMiniGameView layout based on the request. The Start/Pause button should move into the game area overlay, probably in the bottom-right corner, and I need to keep the Reset button next to the score in header row 2. Previously, the layout had the Start/Pause button and Reset in row 2, but now I'll change it so the Score label is first, followed by a compact Reset button, and then the Best label. I’ll aim for stable constraints during the implementation!

Updating button layout. I need to remove the Start/Pause button from the header and place it in the game area overlay as a circular button, probably in the bottom-right corner with a play/pause icon. I think using padding and material background could help it stand out. The user specified it should be on the game view, but bottom-right seems the most intuitive. I’ll also keep the header widths stable while editing the contents of header row 2 to ensure everything flows nicely!

Adjusting button layout. I need to revise the button layout in the header. I plan to adjust it to include the Reset button with a bordered style, ensuring it controls the score and high score by resetting them. I will remove the toggleRound button from the header for clarity. In the game’s ZStack, I’ll add a circular button for toggling the round with a play/pause icon. I’ll also make sure to set the correct accessibility labels and remove any outdated references to colors. Let’s implement these changes!
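To make the reasoning above more concrete, here is a rough SwiftUI sketch of the layout it describes: a header row with Score, a compact bordered Reset button, and Best, plus a circular play/pause button overlaid in the bottom-right corner of the game area. It is an illustration under assumptions, not the code the model produced; `GardenGameLayoutSketch`, the SF Symbol names, and the accessibility strings are hypothetical choices. The icon and label logic lives in static functions so it can be checked without rendering the view.

```swift
import SwiftUI

// Hypothetical sketch of the layout described in the reasoning excerpt.
struct GardenGameLayoutSketch: View {
    @State private var score = 0
    @State private var bestScore = 0
    @State private var isRunning = false

    // SF Symbol for the overlay button: show "pause" while a round runs.
    static func iconName(isRunning: Bool) -> String {
        isRunning ? "pause.fill" : "play.fill"
    }

    // VoiceOver label matching the action the button will perform.
    static func accessibilityText(isRunning: Bool) -> String {
        isRunning ? "Pause round" : "Start round"
    }

    var body: some View {
        VStack(spacing: 12) {
            // Header row 2: Score first, then a compact Reset, then Best.
            HStack {
                Text("Score: \(score)")
                Button("Reset") {
                    // Reset clears both the score and the best score.
                    score = 0
                    bestScore = 0
                }
                .buttonStyle(.bordered)
                .controlSize(.small)
                Spacer()
                Text("Best: \(bestScore)")
            }
            // Game area with the Start/Pause control in the bottom-right corner.
            ZStack(alignment: .bottomTrailing) {
                RoundedRectangle(cornerRadius: 16)
                    .fill(Color.green.opacity(0.15))
                Button {
                    isRunning.toggle()
                } label: {
                    Image(systemName: Self.iconName(isRunning: isRunning))
                        .font(.title2)
                        .frame(width: 48, height: 48)
                        // Material background in a circle helps it stand out.
                        .background(.thinMaterial, in: Circle())
                }
                .accessibilityLabel(Self.accessibilityText(isRunning: isRunning))
                .padding(12)
            }
            .frame(height: 300)
        }
        .padding()
    }
}

#Preview {
    GardenGameLayoutSketch()
}
```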

Reflection

When I reflect on the feature I built into my app, the time I put in, and what I got out the other side, I am both amazed and underwhelmed.

Is it better than Claude or GPT-4? I’m not sure, to be honest. It did seem to take a long time to think each time I prompted it to do something, and it definitely reasoned a lot more before making changes.

Is it amazing that I can just prompt an AI model to build a feature for an iPhone app, and it turns my requests into some math and gives me working code on the other side? YES!

Can I just leave it with a few prompts and not spend hours going back and forth debugging silly things? NO!

I have read that work is happening to make GPT-5 perform better through Cursor, closer to how it did in OpenAI’s demos, but for now it seems like an OK pair programmer, albeit slow and in need of supervision. As with any vibe coding approach, you need technical knowledge of the domain you are using the model in to get usable output from it.

I am considering a vibe code-off between models for some other features, just to see what difference they make. After all, the model benchmarks don’t actually mean anything.

If you live in New Jersey and want to use the app I built, it's currently in beta and you can sign up here.